As I wrap up a role in which I developed a Customer Experience [CX] data listening program, I wanted to share a few of the learnings surfacing as I write a playbook on my exit.

Firstly, the purpose of a CX program is to get evidence to inform meaningful enhancements to digital and physical experiences. We start by identifying questions that matter to the business and that help develop a genuine understanding of people’s experiences and their overall relationship to the brand, service, or company.

For example: What do our users want to do that we aren’t enabling them to, today? Why don’t people complete this flow in this channel? Or, how do our customers feel about us, and what impacts that?

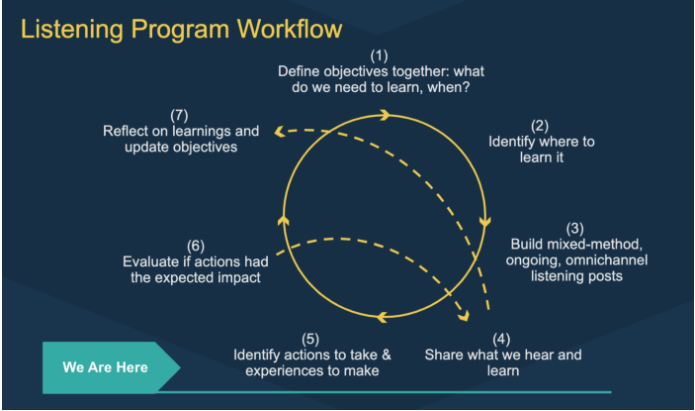

To operationalize this program, you’ll then source [or create!] corresponding data to answer these questions, make sense of it, and share it widely to influence decisions. Then, you’ll go back to your questions, and see if they’re enabling enhancements.

What I learned designing a CX listening [CXL] program:

- Design and test a program with one pilot, then iterate, test again, THEN scale. Pro-tip: while it’s ideal to start low-stakes, it’s not likely. When teams have a learning need, it’s because it matters. Just make sure you build in a way you can easily re-build later, and communicate that this is the plan.

- Priorities shift — make sure you have built-in moments to ensure you’re learning information that’s relevant to the current priorities, and to pivot if you’re not

- New technologies and capabilities will expand the information available: work with what you have today and prepare to expand tomorrow. In version 1 of a recent CX program, the data maturity meant I maintained a spreadsheet that extended to column BO with behavior data from across many silos, scraped from many PDF tables. It enabled great insights! Now there are automated programs doing that work, and it was a pleasure to sunset the spreadsheet.

- There are two program versions: the one the staff running it see, and the simpler one stakeholders see. This is important because making work visible to the right people matters, as does simplifying wherever possible.

- Having an editable, visual playbook [e.g., a Miro or Mural board] shows the way while keeping it agile. I lean heavily on service blueprints as a collaboration tool, for teaching new colleagues what to expect, and for identifying gaps and moments we could improve in the next iteration. Your program is used by people; keep it alive so it can change as their needs change.

- Measuring KPIs adds value. If I had a re-do, I would define and measure simple internal metrics as soon as possible, so I could learn immediately whether they were meaningful (and measurable!). The first year in this program, in an effort to be agile, I backfilled measurement at the end of the year, and had too many measurements in mind. We learned which ones mattered to stakeholders, but could have saved time on the approach if we’d done it as we went along. #alwaysbelearning

- Actions trump data, and outcomes trump actions: the point of a research program is to take action on what you learn. Say you learned where there’s friction or falloff, or found that you can’t validate a hypothesis about behavior. Without taking an action (be it testing a new interface experience, adjusting the order of moments in a service, or just having a solid backlog to create evidence for future decisions), your learnings are for naught. Further, you can optimize any experience, but if it doesn’t move needles that matter, it’s still not a win for users or stakeholders. Data can help us know what’s not working, as well as whether it matters. Working on the right thing is what ultimately creates change.

- Create simple process visuals and socialize them — once we got to Iteration 2 of the program, a visual helped communicate both what was happening, and where stakeholders and researchers were in the circular process. Here’s the second iteration of ours:

Developing any program takes persistence and should deliver learnings, not just about methods and measurements, but also about program design itself.


With thanks to the Valley Bank Research & Service Design team, to the many people who took the time to listen to CX, and to the even greater number who shared their experiences.